Check the online version, I often update my slides.

Talk detail

You can find the Czech version of the talk here.

Let's talk about XSS (yeah, still), and particularly the DOM-based XSS type. This one happens in your browser and in your browser only, and luckily, browsers also offer something to put a stop to it: Trusted Types. I'll explain how it works, what to expect when hunting for bugs, and why Trusted Types are a Good Thing™️, unlike previous browser-based defenses like the XSS Auditor. We'll also talk about Content Security Policy (CSP) reporting, mostly because you enable Trusted Types with a CSP header (yeah, I know). I've also built a demo application so you can have a lot of fun laughing at my CSS skills.

Date and event

May 11, 2023, OWASP Czech Chapter Meeting (talk duration 60 minutes, 20 slides)

Slides

#1 DOM (Document Object Model) XSS (Cross-Site Scripting) is a type of XSS attack that occurs in JavaScript applications running in browsers, without the need to deliver malicious JavaScript in the response from the server. Trusted Types, the technology that could eventually stop the attack, is now available and we should use it (more). Let's talk about the (near) future.

#2 These are the three most common types of XSS:

- Stored XSS (sometimes called “permanent XSS”)

- Reflected (also called “temporary”)

- DOM XSS (sometimes “DOM-based XSS”)

The following is a quick recap of these variants; we won't go into too much depth.

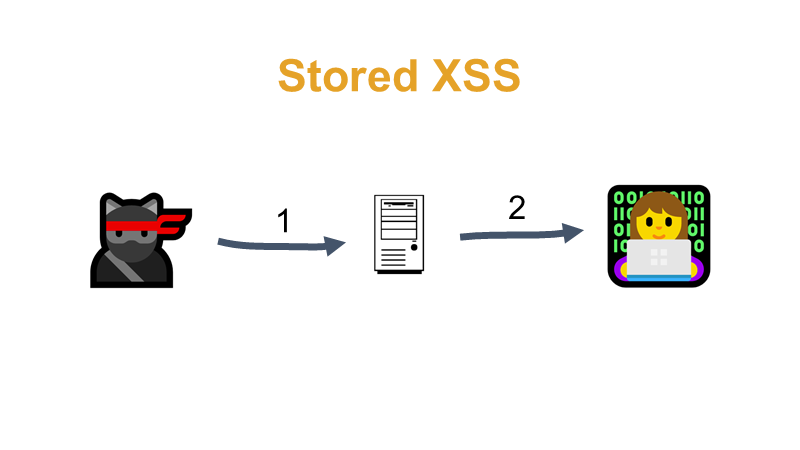

#3 With the Stored variant, the attacker (the ninja cat on the left) stores the malicious JavaScript (step 1) in some storage, typically a database, for example by editing the order note or the delivery address. The application then delivers this JavaScript (step 2) to the browser of any user who views a page that outputs it without proper treatment. The JavaScript in the browser can steal cookies, display a fake login form, send HTTP requests, and scan the internal network from a browser running inside that network. You can find out what such JavaScript can do in my other talk on XSS; there's a demo in the video from minute onwards.

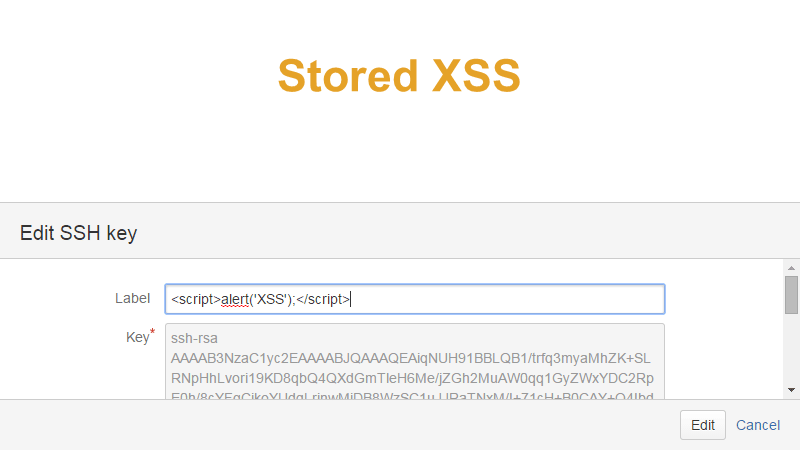

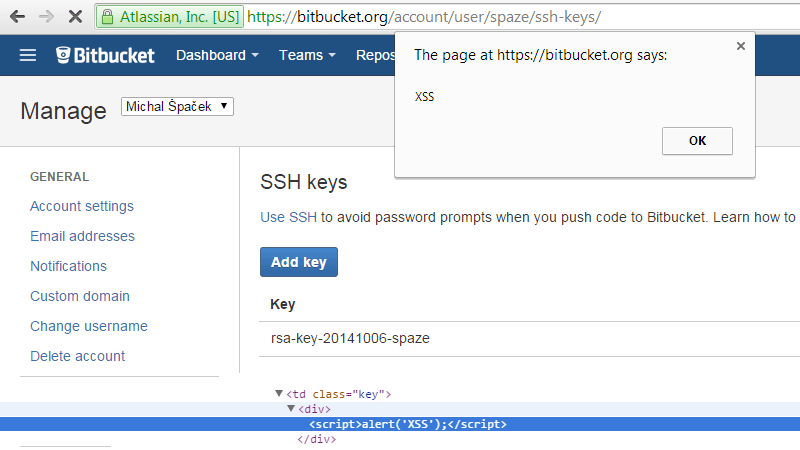

#4 When I have to name something and I'm clueless, I write JS alerts. And I'm clueless more often than I'd like to admit; for example, once I had to name a kid, but for some reason “Alert Špaček” was rejected by multiple people, not sure why. Honestly, sometimes (probably in most cases) I write JS more for a very specific kind of fun than for any bug hunting.

#5 And indeed, sometimes I lol and sometimes I rofl. Like when Bitbucket echoed the name of my SSH key into the page exactly as… not really intended. My browser then ran the JavaScript and displayed an alert. I also figured out how this could be exploited against someone other than myself. Such “against myself” attacks are called Self-XSS, and they very often start with “open developer tools, put this code in the console and you'll have the old Facebook (and I'll have your cookies, for example)”. But this wasn't Self-XSS, and Atlassian, the developer of Bitbucket, put me on their wall of fame for 2014. On my website, JavaScript is omnipresent: it's in HTTP headers and even DNS records, because why not, everything is user input. In some cases even that can cause a problem, but don't worry, my JS just displays some gifs and videos.

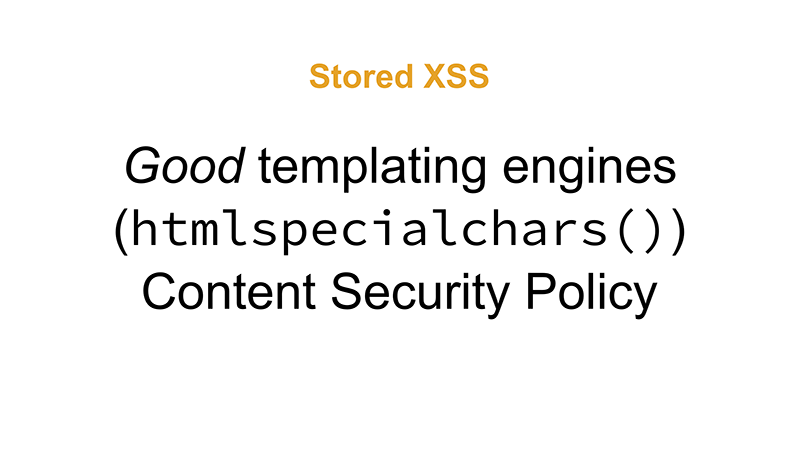

#6 The defense against Stored XSS consists of properly handling dangerous characters on the server using a good templating engine, preferably one equipped with context-aware auto-escaping. You can also use functions like htmlspecialchars() (which converts the & < > " ' characters to entities, thus canceling their special meaning in HTML), but be aware that there are many contexts in which attacker-provided input might be echoed, and each requires a different approach. To illustrate the problem, in HTML context you often don't need to escape single quotes (') unless your attributes are delimited with them, of course, but in JavaScript context (e.g. when you're between <script> and </script>), you need to escape single quotes almost always.
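To make the context problem a bit more tangible, here's a minimal JavaScript sketch (runs in Node.js or a browser console) with two hypothetical helpers: escapeHtml() for the HTML context and toJsStringLiteral() for the JavaScript context. It illustrates why one escaping function isn't enough; it's not a replacement for a context-aware templating engine, which should be doing this for you.

```javascript
// HTML context: element content or a quoted attribute value.
const HTML_ENTITIES = {
  '&': '&amp;',
  '<': '&lt;',
  '>': '&gt;',
  '"': '&quot;',
  "'": '&#39;',
};

function escapeHtml(value) {
  return String(value).replace(/[&<>"']/g, (ch) => HTML_ENTITIES[ch]);
}

// JavaScript context: between <script> and </script> the browser does not
// decode HTML entities, so emit a complete JS string literal instead.
// JSON.stringify escapes double quotes, backslashes and control characters
// like line breaks; turning "<" into \u003c also stops "</script>" from
// closing the script element early.
function toJsStringLiteral(value) {
  return JSON.stringify(String(value)).replace(/</g, '\\u003c');
}

const input = "O'Reilly</script><img src=x onerror=alert(1)>";
console.log(`<p title="${escapeHtml(input)}">${escapeHtml(input)}</p>`);
console.log(`<script>const name = ${toJsStringLiteral(input)};</script>`);
```

Putting escapeHtml() output inside <script> would be the wrong choice, because entities aren't decoded there and the JavaScript would see a literal &#39; instead of a quote.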

(Auto-)escaping can be considered the primary level of defense against XSS; the secondary one can be Content Security Policy (CSP). With CSP, you can tell the browser where it can download images and fonts from, which scripts it can run, and where it can submit form data, so that if an attacker manages to inject some malicious JavaScript into the page, the browser may still refuse to execute it. But CSP is not the primary level, only an additional one. We won't discuss CSP in detail today, but we'll touch on it later. For more information, check out my talk on CSP, and here's more about CSP Level 3.
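For illustration, a CSP header is just a list of directives and their allowed sources. This sketch builds one from a plain object; the policy itself, the buildCsp() name and the images.example.com host are made-up examples, not a recommendation. Because script-src doesn't contain 'unsafe-inline', an injected inline <script> or onerror handler would be blocked by the browser.

```javascript
// Turn { directive: [sources] } into "directive source source; directive ..."
function buildCsp(directives) {
  return Object.entries(directives)
    .map(([directive, sources]) => [directive, ...sources].join(' '))
    .join('; ');
}

const csp = buildCsp({
  'default-src': ["'self'"],
  'img-src': ["'self'", 'https://images.example.com'], // where images may come from
  'font-src': ["'self'"], // where fonts may come from
  'script-src': ["'self'"], // which scripts may run (no inline ones)
  'form-action': ["'self'"], // where forms may be submitted
});
console.log(`Content-Security-Policy: ${csp}`);
```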

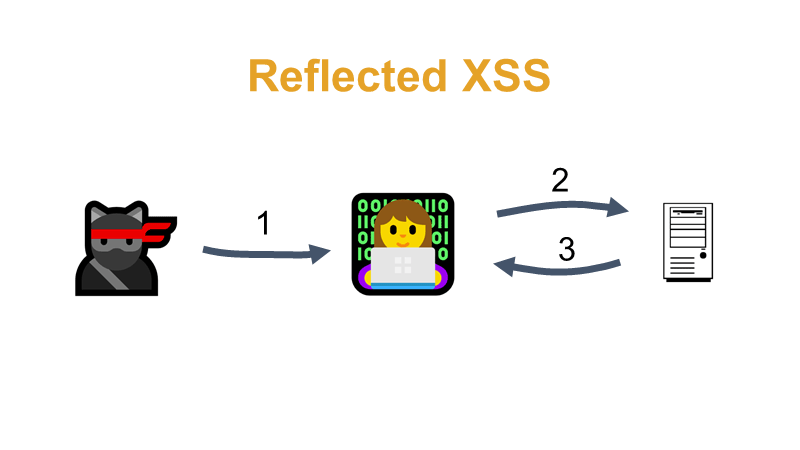

#7 In the Reflected XSS variant, the attacker first makes the user click a link that contains some malicious JavaScript (step 1). When clicked, the malicious JS is sent to the application (step 2), which echoes it, or reflects it, back to the browser of the user who clicked the link (step 3). The malicious code is reflected from the application back to the browser, hence the name “reflected”. Imagine, for example, a page with search results at /search?query=<script>…</script> which echoes “Search results for $query”.
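A minimal sketch of such a vulnerable search page, assuming a hypothetical renderSearchPage() function that receives the request URL (no dependencies, runs in Node.js or a browser). The payload travels percent-encoded in the link, but URL parsing decodes it, so real markup gets echoed back:

```javascript
// Vulnerable on purpose: the `query` parameter is reflected into the HTML as-is
function renderSearchPage(requestUrl) {
  // searchParams decodes %3C, %3E, %2F etc. back into < > /
  const query = new URL(requestUrl, 'https://example.com').searchParams.get('query') ?? '';
  return `<h1>Search results for ${query}</h1>`; // no escaping: Reflected XSS
}

// The link the attacker makes the victim click (step 1)
const link = '/search?query=%3Cscript%3Ealert(1)%3C%2Fscript%3E';
console.log(renderSearchPage(link));
// <h1>Search results for <script>alert(1)</script></h1>
```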

#8 The protection against Reflected XSS is basically the same as for Stored XSS: converting dangerous characters to entities on the server, ideally with a context-aware auto-escaping templating engine, or with functions like htmlspecialchars() and more. Content Security Policy serves as an additional layer of security in case the primary one fails, see earlier.

A built-in Reflected XSS protection called XSS Auditor (sometimes “XSS Filter”) was once available in some browsers. The auditor watched for anything that looked like JavaScript in the outgoing request (step 2 on the previous slide), and if it came back from the server as well (in step 3), the XSS Auditor declared it Reflected XSS and, depending on the browser settings or version, either “fixed” the page and displayed it without the sneaky JS, or blocked the page load completely and displayed a full-page warning instead. Sounds like a Good Idea™, except not at all. Some developers mistook the XSS Auditor for a general XSS protection, thinking “what security flaw, the browser doesn't show me any alert, so there's no XSS”.

Additionally, the XSS Auditor couldn't always recognize that there was JS in the request, and bypasses were quite common and, frankly, inevitable. CSP is a better tool, so the Auditor's days were numbered. It was first removed by the original Edge, then by Chrome and all browsers built on Chromium, and eventually by Safari.
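To show why the heuristic was so easy to fool, here's a deliberately naive sketch of the idea. It's NOT how any browser actually implemented the XSS Auditor, just the general “is the script from the request also in the response?” check, plus a hypothetical application that strips double quotes from the input before echoing it:

```javascript
// Naive "auditor": flag the response if a <script> block found in the
// (decoded) request URL also appears verbatim in the response body
function looksReflected(requestUrl, responseBody) {
  const decoded = decodeURIComponent(requestUrl);
  const scriptsInRequest = decoded.match(/<script\b[^>]*>[\s\S]*?<\/script>/gi) ?? [];
  return scriptsInRequest.some((script) => responseBody.includes(script));
}

// Payload reflected verbatim: the heuristic catches it
const url = '/search?query=%3Cscript%3Ealert(1)%3C%2Fscript%3E';
const response = '<h1>Search results for <script>alert(1)</script></h1>';
console.log(looksReflected(url, response)); // true

// The app strips double quotes before echoing, so the request contains
// <scr"ipt> (no match) while the response contains a working <script>
const bypassUrl = '/search?query=%3Cscr%22ipt%3Ealert(1)%3C%2Fscr%22ipt%3E';
console.log(looksReflected(bypassUrl, response)); // false, yet the page runs alert(1)
```

Any transformation the application applies between the request and the response (stripping, decoding, combining several parameters) can break the match like this.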

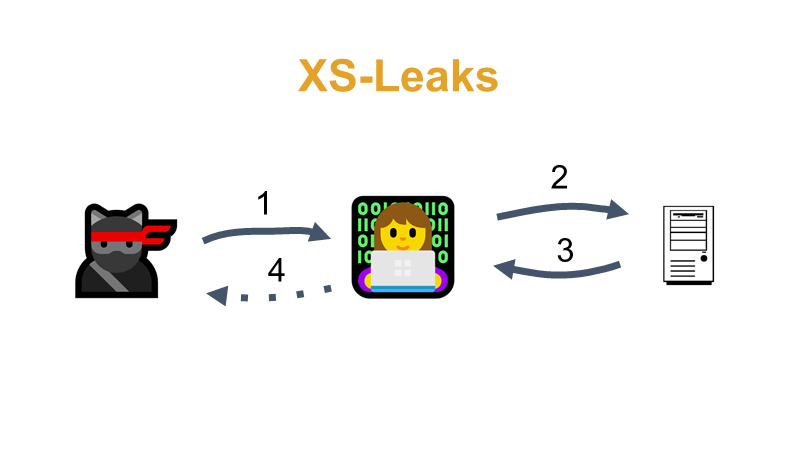

#9 The XSS Auditor made it possible to extract information from, or search, the pages sent to the user by a vulnerable application. Hence the attack names XS-Leaks or XS-Search, where XS stands for “Cross-Site”. Imagine that the application sends some JS code to the user (e.g. var signedin = true) and the attacker wants to find out if the user is logged into such a vulnerable application. The attacker sends the user a link (1), e.g. https://example.com/neco?<script>var signedin = true</script>, the XSS Auditor detects Reflected XSS (2, 3) and blocks the page from loading, which also blocks, for example, an image load from an attacker-controlled server. The attacker can also use embedded frames to find out how long the page took to load and guess whether the JS was blocked, etc. (4).

#10 Just for reporting XSS bugs in 2015–2016, Google paid 1.2 million USD. Among other things, this signals that XSS attacks are quite powerful and far from rare.

#11 Just to give you an idea, this is what one million dollars in one-dollar bills looks like. The cube is on display in a museum in Chicago (source).

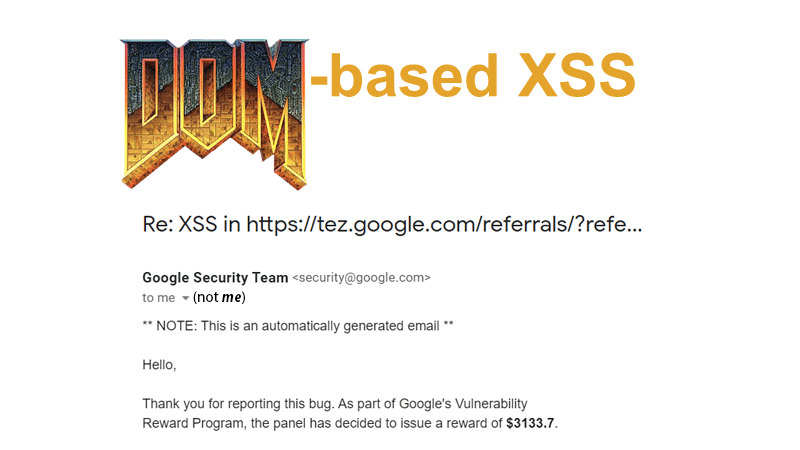

#12 Google doesn't say exactly what the million was paid for, although it might be possible to track it down somewhere. They also pay a lot of money for the third variant, DOM-based XSS. For example, for this bug Google paid a nice round sum of 3133.7 USD. For Stored and Reflected XSS, Google et al. had already proposed Content Security Policy as an additional layer of security, so it was about time to do something about DOM XSS as well.

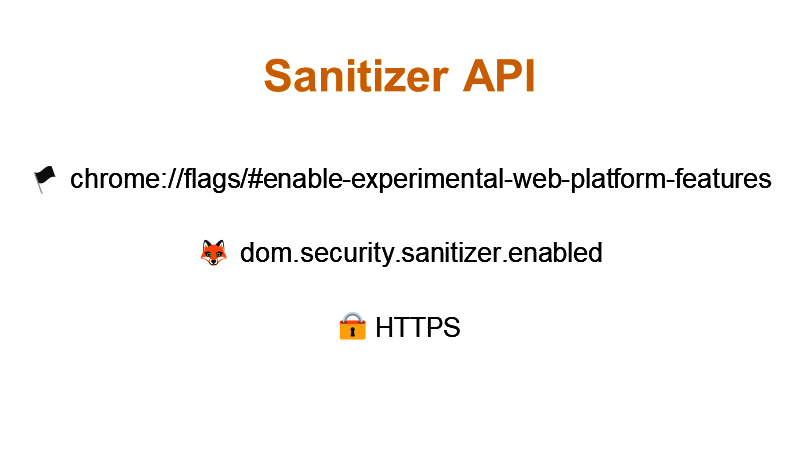

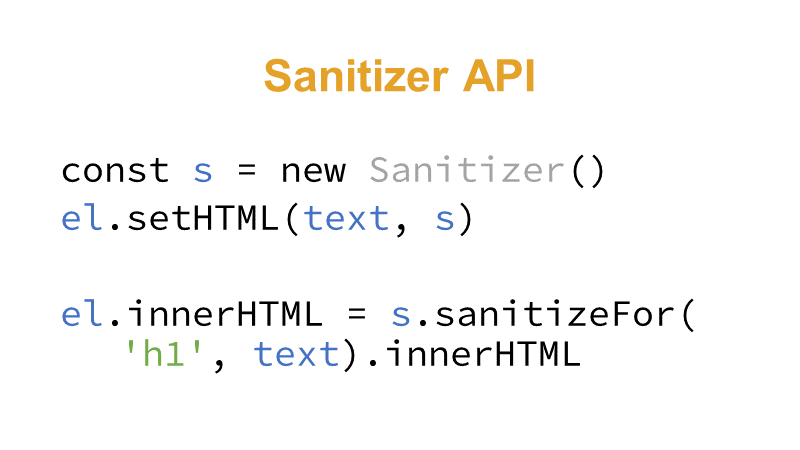

#13 Up until ~now, JavaScript lacked a standard way of escaping characters for HTML. You could use string.replaceAll() or a package that would do the same, but either way you had to add something extra. The Sanitizer API, developed by Google, Mozilla and Cure53, tries to change that. Currently (May 2023), it's only partially available in Chrome and Chromium-based browsers, and you have to enable chrome://flags/#enable-experimental-web-platform-features to get a more complete implementation. The Sanitizer API is not yet available in Firefox at all, unless you enable dom.security.sanitizer.enabled in about:config. The Sanitizer API is only available on HTTPS pages (in “secure contexts”, to be precise). The API is used for “cleaning” HTML that you want to assign somewhere in your JS code or display in some element on the page, so that nobody can sneak in some malicious JS. The DOMPurify library, coincidentally (or rather not) also from Cure53, is often used for such cleaning too.
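Here's what the “something extra” can look like: a minimal escaping sketch using String.prototype.replaceAll() (available in modern browsers and Node.js 15+). The function names are hypothetical. Note that this escapes everything, so no HTML survives; that's different from sanitizing, where the Sanitizer API or DOMPurify keep the harmless markup and drop only the dangerous parts.

```javascript
// "&" has to go first, otherwise the "&" in entities produced by the
// later replacements would get escaped again
function escapeHtml(value) {
  return String(value)
    .replaceAll('&', '&amp;')
    .replaceAll('<', '&lt;')
    .replaceAll('>', '&gt;')
    .replaceAll('"', '&quot;')
    .replaceAll("'", '&#39;');
}

console.log(escapeHtml('FOO<img src=x onerror=alert(1)>BAR'));
// FOO&lt;img src=x onerror=alert(1)&gt;BAR

// Wrong order, for comparison: "<" becomes "&lt;", then its "&" becomes "&amp;"
const escapeWrongOrder = (value) =>
  String(value).replaceAll('<', '&lt;').replaceAll('&', '&amp;');
console.log(escapeWrongOrder('<b>')); // &amp;lt;b>
```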

#14 The Sanitizer has several methods for sanitizing HTML, and in addition, elements get a new setHTML() method. You can try it anywhere really, but let's go to https://example.com/?foo=FOO%3Cimg%20src=x%20onerror=alert(1)%3EBAR.

The foo parameter is not used for anything on the page, we'll only use it to simulate DOM XSS. Load up the link, open developer tools console and run:

```javascript
const el = document.getElementsByTagName('h1')[0]
const param = new URLSearchParams(location.search).get('foo')
el.innerHTML = param
```

By doing this, the contents of the H1 element are replaced by what's in the foo parameter, and by writing directly to the innerHTML sink, we've introduced a DOM XSS vulnerability. Let's sanitizerify it by using setHTML():

```javascript
const s = new Sanitizer()
el.setHTML(param, s)
```

Notice that the Sanitizer has removed the dangerous onerror attribute but kept the IMG tag.

The same could be done with sanitizeFor() and innerHTML like this:

```javascript
el.innerHTML = s.sanitizeFor('h1', param).innerHTML
```

To remove all HTML tags, you can configure the Sanitizer to do so (doesn't work with setHTML(…, s), only with sanitizeFor(), yet?):

```javascript
const s = new Sanitizer({allowElements: []})
```

And then all that's left is to go through your code and replace the dangerous things with the Sanitizer 😅

Note that currently (May 2023) only setHTML() works in Chrome without setting the flag mentioned earlier, but you'll have to enable it for the other examples to work.

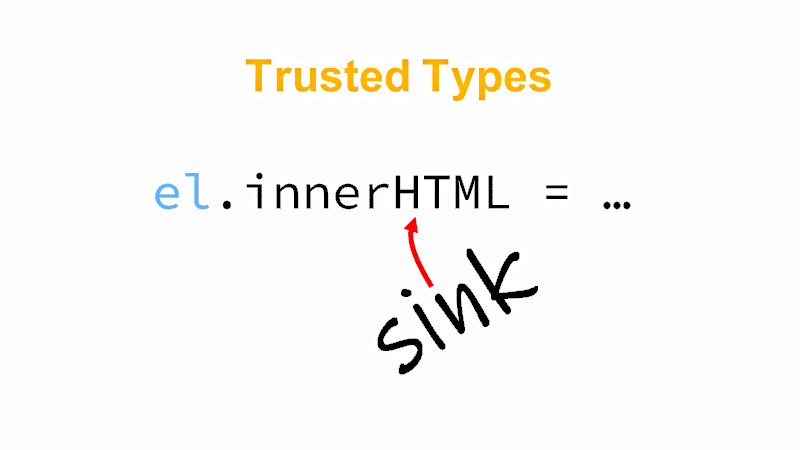

#15 DOM-based XSS happens when raw, unescaped or unsanitized input is written into so-called sinks. One such sink is the innerHTML property, another is e.g. the eval() function. eval() runs any string as JavaScript, and innerHTML can run JavaScript through event handler attributes like onerror (SCRIPT elements inserted via innerHTML aren't executed, but that doesn't help much). Trusted Types can ensure that such arbitrary JS does not run.

#16 Only Chrome and other Chromium-based browsers support Trusted Types at the moment (May 2023), and you don't need to enable them, they're supported out of the box. You can use a polyfill to have Trusted Types available in other browsers as well. The technology can disable writing arbitrary strings to sinks and require you to use objects of the TrustedHTML class instead. Trusted Types will help us prove that we're using safe writes, because the unsafe ones can't even be used. We can create TrustedHTML objects either manually, or we can leave it all to automagic. You can also use Trusted Types just to find the sinks; try it on my demo site. Notice the CSP header, which enables Trusted Types using the require-trusted-types-for 'script' directive. Also notice the “report-only” CSP variant, which ensures that the page loads normally and only reports are sent. Click to see the code and you'll see the innerHTML sink write. Use the Enter any HTML prompt window to actually write something into that sink, which the browser will happily do, but it will both complain in the dev tools console and send a report you can see under the Reports link.
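The header from the demo boils down to a single directive. A small sketch of switching between the report-only and the enforcing variant; the trustedTypesHeader() name and the report URL are hypothetical placeholders, and the demo site's actual header may contain more directives:

```javascript
function trustedTypesHeader({ reportOnly, reportUri }) {
  // The Report-Only variant only reports violations, the writes still happen
  const name = reportOnly
    ? 'Content-Security-Policy-Report-Only'
    : 'Content-Security-Policy';
  // require-trusted-types-for 'script' makes the sinks demand Trusted Types objects
  const value = `require-trusted-types-for 'script'; report-uri ${reportUri}`;
  return { name, value };
}

const header = trustedTypesHeader({
  reportOnly: true,
  reportUri: 'https://example.com/csp-reports',
});
console.log(`${header.name}: ${header.value}`);
```

Starting in report-only mode and switching the flag later is the same path as in the demo: first find the sinks, then enforce.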

#17 In the next step, we'll require TrustedHTML objects and won't allow any other writes. Try it on the next demo page, https://canhas.report/trusted-types, and notice the CSP header without report-only, the policy creation using trustedTypes.createPolicy, and then the use of that policy to write to innerHTML.
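A minimal sketch of such a named policy, assuming a hypothetical policy name escape-policy and a simple escaping function of your choice. The feature detection lets the same code run where Trusted Types aren't available (including Node.js, so you can try the escaping part there); in that case the object just returns plain strings.

```javascript
// Escape the HTML special characters as numeric character references
const escapeHtml = (value) =>
  String(value).replace(/[&<>"']/g, (ch) => `&#${ch.charCodeAt(0)};`);

// Real TrustedHTML objects where supported, plain strings elsewhere
const policy =
  typeof trustedTypes !== 'undefined'
    ? trustedTypes.createPolicy('escape-policy', { createHTML: escapeHtml })
    : { createHTML: escapeHtml };

// In the browser you'd then write:
//   document.querySelector('h1').innerHTML = policy.createHTML(userInput);
const html = policy.createHTML('<img src=x onerror=alert(1)>');
console.log(String(html)); // &#60;img src=x onerror=alert(1)&#62;
```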

Trusted Types leave the escaping up to you; you can use DOMPurify, the Sanitizer, or plain string.replaceAll(). This means that Trusted Types as a defense against DOM-based XSS are only as good as your escaping code. When you try to write a plain string into a sink (the Enter any HTML button also tries this), it fails and a report is sent. If you don't want to create TrustedHTML objects manually, you can create an escaping policy named default, trustedTypes.createPolicy('default', …), and it will then be used every time you write a plain string to a sink. In this case, however, make sure your escaping is pretty solid, meaning e.g. that you escape properly in all contexts. The use of the default policy can be seen on the next page: https://canhas.report/…fault-policy.
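A sketch of a default policy with a hypothetical defaultCreateHTML() callback. Per the Trusted Types specification, the browser calls it with the plain string plus the expected type name and the sink name (something like "Element innerHTML"), so it's also a convenient place to log which sinks your code, or your libraries, write to. The escaping here is just an example; as mentioned above, it has to be solid for all the contexts you write to.

```javascript
function defaultCreateHTML(value, typeName, sink) {
  // Log where the plain string was about to be written
  console.warn(`Default policy escaped a string for ${typeName} (${sink})`);
  // Numeric character references for the HTML special characters
  return String(value).replace(/[&<>"']/g, (ch) => `&#${ch.charCodeAt(0)};`);
}

if (typeof trustedTypes !== 'undefined') {
  // There can be only one policy called "default"; creating it twice throws
  trustedTypes.createPolicy('default', { createHTML: defaultCreateHTML });
  // From now on, el.innerHTML = '<img src=x onerror=alert(1)>' writes the
  // escaped text instead of being blocked
}
```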

I really like this automation and provability and look forward to seeing it become even more usable. Nowadays, a lot of libraries write arbitrary strings to sinks, so Trusted Types often can't even run cleanly in that “report-only” mode. For example, I had to replace the syntax highlighting library used in my Trusted Types demo pages with naive server-side string highlighting. The library I replaced, highlight.js, writes to innerHTML, and just loading that demo page generated two reports. But hopefully the developers will sort this out sometime soon.

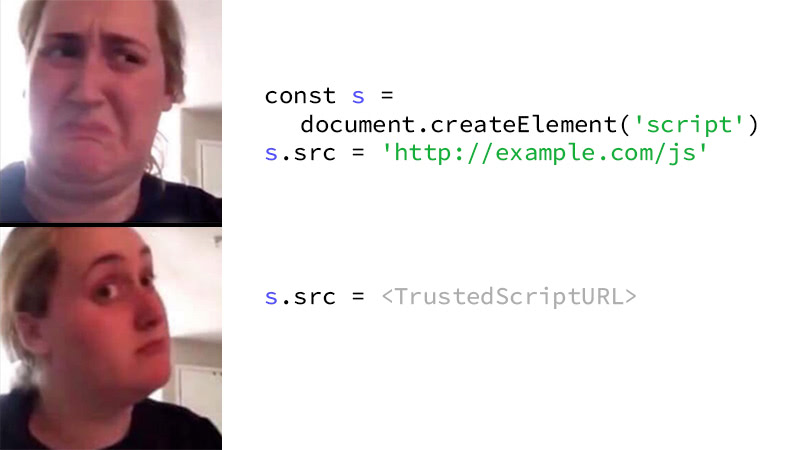

#18 This sink may be a bit unexpected. If you create a SCRIPT element when using Trusted Types, its src property won't accept a plain string and you have to pass a TrustedScriptURL object instead. This is because a plain string, possibly user input, may eventually make the browser load JavaScript from an attacker-controlled origin, for example.

Let's test it out on a previously used Trusted Types demo page. Load the page, open the devtools console and enter:

```javascript
const script = document.createElement('script');
```

Now when you try loading an external file by running:

```javascript
script.src = 'http://example.com/foo.js';
```

you'll get an error message saying “This document requires ‘TrustedScriptURL’ assignment” and a report will be sent; check that by clicking the “Reports” link. The assignment goes through anyway, which you can confirm by typing script, the variable name, and hitting Enter. That's because that particular page uses the Content-Security-Policy-Report-Only header; note the “Report-Only” suffix.

Let's create a policy like this:

```javascript
const policy = trustedTypes.createPolicy('LePolicy', {
  createHTML: (string) => string.replace(/</g, "&lt;"),
  createScriptURL: (string) => string.replace('http://', 'https://'),
});
```

Notice that we have reused the existing code and only added one new method, createScriptURL. For simplicity, this one only upgrades the protocol from http:// to https://; in real apps you'd probably check or rewrite the origin or something like that. Again, this check is completely up to you, just like what your createHTML method contains.

Use the method to create the TrustedScriptURL object and assign it to the src property:

```javascript
script.src = policy.createScriptURL('http://example.com/foo.js');
```

Confirm the protocol upgrade once again by typing script and hitting Enter.
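As mentioned, in real apps you'd rather check the origin than just replace the protocol. A minimal sketch of such a createScriptURL function; the allowlisted origin, the base URL for relative paths and the policy name script-url-policy are all hypothetical. Parsing with URL means that a data: URL or a different host doesn't slip through, because its origin isn't on the list:

```javascript
// Hypothetical allowlist of origins we're willing to load scripts from
const ALLOWED_SCRIPT_ORIGINS = new Set(['https://example.com']);

function checkScriptUrl(input) {
  // Parse instead of doing string replacements; relative URLs are resolved
  // against a hypothetical base (in the browser you'd use location.href)
  const url = new URL(input, 'https://example.com/');
  // Same upgrade as in the demo policy, but applied to the scheme only
  if (url.protocol === 'http:') url.protocol = 'https:';
  if (!ALLOWED_SCRIPT_ORIGINS.has(url.origin)) {
    throw new TypeError(`Script URL not allowed: ${url.href}`);
  }
  return url.href;
}

const urlPolicy =
  typeof trustedTypes !== 'undefined'
    ? trustedTypes.createPolicy('script-url-policy', { createScriptURL: checkScriptUrl })
    : { createScriptURL: checkScriptUrl };

console.log(String(urlPolicy.createScriptURL('http://example.com/foo.js')));
// https://example.com/foo.js
```

Throwing makes the rejected assignment fail loudly instead of silently loading nothing.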

You can also use a default policy; reload the page and run this:

```javascript
const script = document.createElement('script');
trustedTypes.createPolicy('default', {
  createHTML: (string) => string.replace(/</g, "&lt;"),
  createScriptURL: (string) => string.replace('http://', 'https://'),
});
script.src = 'http://example.com/foo.js';
```

Last but not least, confirm the upgrade by typing script and hitting Enter.

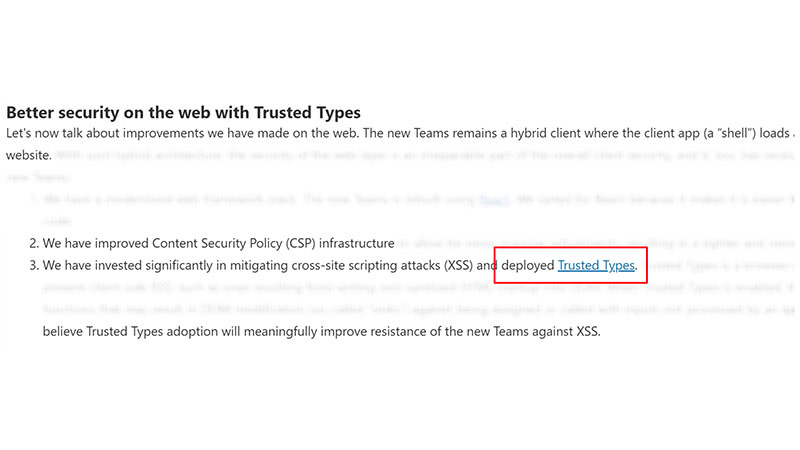

#19 For at least the past 10 years or so, we've been seeing many new desktop applications built using web technologies like HTML and JavaScript. This is cool, but it also brings many modern problems, because suddenly attacks like XSS, once known as a web-only thing, can be used to attack desktop users too. Cross-platform, ftw!

As demonstrated by Microsoft, modern problems require modern solutions. Their new desktop Teams client is basically a website, which allowed them to use Trusted Types, and I think it's pretty cool that they use a web-based defense mechanism against a web-based attack. In a desktop app.

#20 I really like the approach Trusted Types bring; it's something you could call provable security. Provided your escaping policy is fine, you can prove that all your sinks are fine too, because there's no other way to write into them and the writes will always be escaped. I (want to) believe this means the end of DOM-based XSS, just like context-aware auto-escaping templating systems mean the end of regular XSS, but please remind me of this in 10 years.